TYPES OF REGRESSION TECHNIQUES A MACHINE LEARNER SHOULD KNOW

By - Nikhil Gupta

Introduction :

Linear and logistic regression are usually the first algorithms people learn in predictive modeling. Because of their popularity, many analysts end up thinking that they are the only forms of regression, and those who are slightly more involved think that these two are the most important among them.

The truth is that there are many forms of regression analysis. Each has its own importance and is used where it is best suited.

Table of contents :

1. What is regression analysis?

2. Why do we use regression analysis?

3. What are the types of regression analysis techniques?

4. How to select the right regression model?

What is regression analysis?

Regression analysis is a form of predictive modelling that investigates the relationship between a dependent (target) variable and one or more independent (predictor) variables. This technique is used for forecasting and for studying cause-and-effect relationships between variables. For example, the relationship between the number of rash driving incidents and the road accidents resulting from them is best studied through regression analysis.

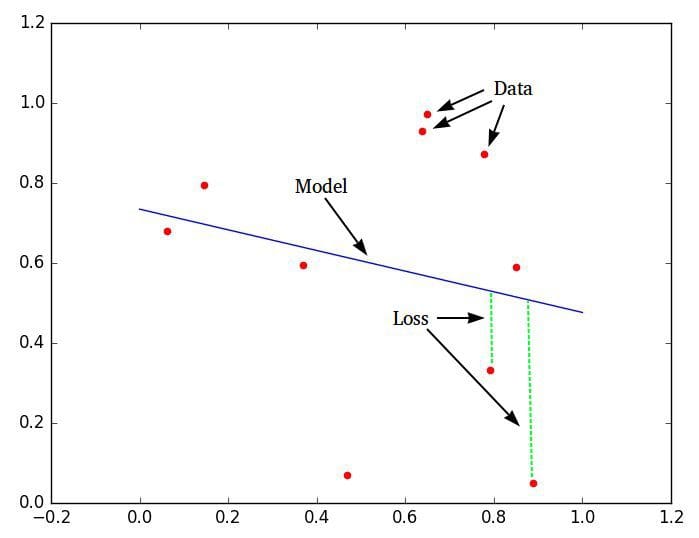

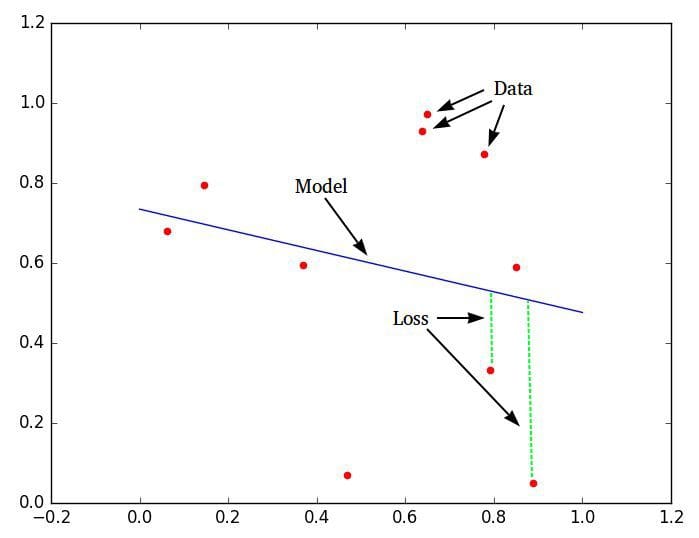

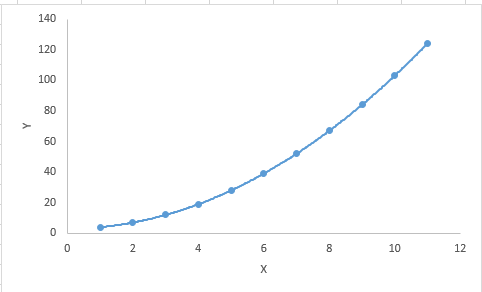

Regression analysis is an important tool for modeling and analyzing data. Here we fit a curve or line to the data points in such a manner that the distances of the data points from the curve or line are minimized.

Why do we use regression analysis?

As mentioned, regression analysis estimates the relationship between two or more variables.

There are multiple benefits of using regression. They are:

- It indicates the significant relationships between the independent and dependent variables.

- It indicates the strength of the impact of multiple independent variables on a dependent variable.

Regression analysis also allows us to compare the effects of variables measured on different scales, such as the effect of price changes and the number of promotional activities. These benefits help market researchers / data analysts / data scientists to eliminate and evaluate the best set of variables to be used for building predictive models.
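One common way to put coefficients on a comparable footing is to standardize each predictor before fitting, so that every coefficient is expressed per standard deviation of its variable. The sketch below is a minimal illustration with NumPy. The price, promotion and sales figures are invented for the example, and the helper name `standardized_coefficients` is hypothetical.

```python
import numpy as np

def standardized_coefficients(X, y):
    """Fit y on z-scored predictors and return one coefficient per predictor."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    # z-score each column: subtract its mean, divide by its standard deviation
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    design = np.column_stack([np.ones(len(Z)), Z])   # add an intercept column
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return coef[1:]                                  # drop the intercept

# Invented data: price in currency units, promotions as a count
price = np.array([100.0, 120.0, 90.0, 110.0, 130.0, 95.0])
promos = np.array([2.0, 5.0, 1.0, 4.0, 3.0, 6.0])
sales = 500.0 - 2.0 * price + 10.0 * promos          # assumed relationship

beta = standardized_coefficients(np.column_stack([price, promos]), sales)
print("effect per standard deviation (price, promotions):", beta)
```

In this made-up data the raw coefficient on price (-2) is smaller in magnitude than the one on promotions (10), yet per standard deviation the price effect is the larger of the two, which is the kind of comparison the raw units hide.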

What are the types of regression techniques?

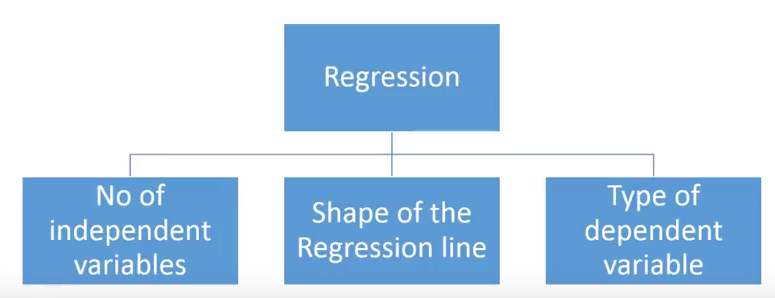

There are various types of regression techniques available for making predictions. These techniques are mostly driven by three metrics:

- Number of independent variables

- Shape of the regression line

- Type of dependent variable

The commonly used regression techniques are:

- Linear regression

- Logistic regression

- Polynomial regression

- Stepwise regression

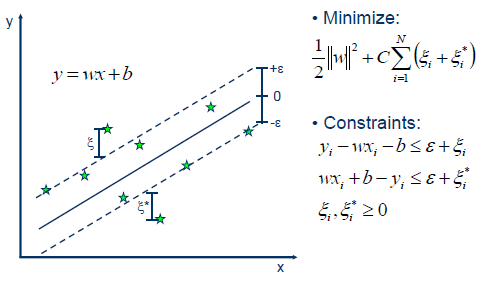

- Ridge regression

- Lasso regression

- Elastic net regression

1. Linear Regression

It is one of the most widely used regression techniques and is among the first topics picked up while learning predictive modeling. In this technique, the dependent variable is continuous, the independent variable(s) can be continuous or discrete, and the regression line is linear.

Linear regression establishes the relationship between the dependent variable (Y) and one or more independent variables (X) using a best-fit straight line (the regression line).

It is represented by the equation

Y = a + (b*X)+e

where

a= intercept

b= slope

e= error term

The difference between simple linear regression and multiple linear regression is that multiple linear regression has more than one independent variable, whereas simple linear regression has only one.
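As a rough illustration of the equation Y = a + (b*X) + e, the sketch below estimates a and b by ordinary least squares, which is one common way (assumed here) of choosing the best-fit line. The function names and the sample data are hypothetical, and the data is noise-free so the known coefficients are easy to recognise in the result.

```python
import numpy as np

def fit_simple_linear(x, y):
    """Return (a, b) minimising the sum of squared errors for Y = a + b*X."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = x.mean(), y.mean()
    # slope = covariance of X and Y divided by variance of X
    b = np.sum((x - x_mean) * (y - y_mean)) / np.sum((x - x_mean) ** 2)
    a = y_mean - b * x_mean
    return a, b

def fit_multiple_linear(X, y):
    """Return [a, b1, b2, ...] for Y = a + b1*X1 + b2*X2 + ... by least squares."""
    X = np.asarray(X, dtype=float)
    design = np.column_stack([np.ones(len(X)), X])   # intercept column first
    coef, *_ = np.linalg.lstsq(design, np.asarray(y, dtype=float), rcond=None)
    return coef

# Simple regression: one independent variable, data generated from Y = 1 + 2*X
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
y = 1.0 + 2.0 * x
a, b = fit_simple_linear(x, y)
errors = y - (a + b * x)                             # the e term for each point

# Multiple regression: two independent variables, Y = 1 + 2*X1 - 3*X2
X2 = np.array([[0.0, 1.0], [1.0, 0.0], [2.0, 3.0], [3.0, 1.0], [4.0, 2.0]])
y2 = 1.0 + 2.0 * X2[:, 0] - 3.0 * X2[:, 1]
coef2 = fit_multiple_linear(X2, y2)

print("simple:", a, b)
print("multiple:", coef2)
```

With real, noisy data the errors would not be zero, and the estimated coefficients would only approximate the underlying relationship.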

Important Points:

- There must be a linear relationship between the independent and dependent variables.

- Multiple regression can suffer from multicollinearity, autocorrelation and heteroskedasticity.

- Linear regression is very sensitive to outliers. They can badly distort the regression line and, eventually, the forecasted values.

- Multicollinearity can increase the variance of the coefficient estimates and make the estimates very sensitive to minor changes in the model. The result is that the coefficient estimates are unstable.

- In the case of multiple independent variables, we can use forward selection, backward elimination or a stepwise approach to select the most significant independent variables.
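Two of the points above can be checked numerically. The sketch below first shows how a single corrupted observation shifts a fitted slope, then computes variance inflation factors (VIF), a widely used diagnostic for multicollinearity that is an addition here and not something the text prescribes. All data is synthetic, and the helper name `variance_inflation_factors` is hypothetical.

```python
import numpy as np

# Part 1: effect of one outlier on the fitted line
x = np.arange(10, dtype=float)
y_clean = 1.0 + 2.0 * x                  # exact line Y = 1 + 2*X
y_outlier = y_clean.copy()
y_outlier[-1] += 50.0                    # corrupt a single observation

# np.polyfit returns coefficients from highest degree down: [slope, intercept]
slope_clean, intercept_clean = np.polyfit(x, y_clean, 1)
slope_outlier, intercept_outlier = np.polyfit(x, y_outlier, 1)
print("slope without / with outlier:", slope_clean, slope_outlier)

# Part 2: variance inflation factors for detecting multicollinearity
def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing column j on the rest."""
    X = np.asarray(X, dtype=float)
    n, p = X.shape
    vifs = []
    for j in range(p):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n), others])
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        residual = target - design @ coef
        r_squared = 1.0 - (residual @ residual) / np.sum((target - target.mean()) ** 2)
        vifs.append(1.0 / (1.0 - r_squared))
    return np.array(vifs)

rng = np.random.default_rng(0)
x1 = rng.normal(size=200)
x2 = x1 + 0.05 * rng.normal(size=200)    # almost a copy of x1
x3 = rng.normal(size=200)                # unrelated to the other two
vifs = variance_inflation_factors(np.column_stack([x1, x2, x3]))
print("VIF per predictor:", vifs)
```

A VIF near 1 suggests a predictor is largely independent of the others. A frequently quoted rule of thumb treats values above roughly 5 to 10 as a sign of problematic multicollinearity, though the exact threshold is a judgment call.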

2. Polynomial Regression

A regression equation is a polynomial regression equation if the power of the independent variable is more than 1. The equation below represents a polynomial equation:

Y=a+b*X^2

In this regression technique, the best fit is not a straight line but a curve.
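The equation Y = a + b*X^2 is still linear in the coefficients a and b, so it can be fitted with the same least-squares machinery as a straight line by using X^2 as the predictor. The following is a minimal sketch under that assumption. The function name `fit_quadratic_term` and the noise-free sample data are made up for illustration.

```python
import numpy as np

def fit_quadratic_term(x, y):
    """Estimate (a, b) in Y = a + b*X^2 by least squares."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    # The design matrix has an intercept column and an X^2 column
    design = np.column_stack([np.ones_like(x), x ** 2])
    (a, b), *_ = np.linalg.lstsq(design, y, rcond=None)
    return a, b

# Hypothetical data generated exactly from Y = 0.5 + 1.5*X^2
x = np.linspace(-3.0, 3.0, 13)
y = 0.5 + 1.5 * x ** 2
a, b = fit_quadratic_term(x, y)
print("a =", a, "b =", b)
```

A general polynomial with several powers of X works the same way, with one column per power in the design matrix.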

Important points :

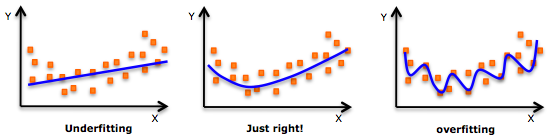

- While there might be a temptation to fit a higher degree polynomial to get lower error, this can result in over-fitting. Always plot the relationships to see the fit and focus on making sure that the curve fits the nature of the problem. Here is an example of how plotting can help:

- Especially look out for curve towards the ends and see whether those shapes and trends make sense. Higher polynomials can end up producing weird results on extrapolation.
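The trade-off described above can be explored with a small experiment. The sketch below fits a degree-2 and a degree-9 polynomial to the same synthetic, noisy quadratic data and compares their training error with their predictions outside the observed range. The data, the random seed and the chosen degrees are assumptions made for illustration only.

```python
import numpy as np
from numpy.polynomial import Polynomial

rng = np.random.default_rng(42)

# Synthetic data: a quadratic trend plus random noise, observed on [-3, 3]
x_train = np.linspace(-3.0, 3.0, 12)
y_train = 1.0 + 0.5 * x_train ** 2 + rng.normal(scale=1.0, size=x_train.size)

def fit_and_score(degree):
    """Fit a polynomial of the given degree and return (model, training MSE)."""
    model = Polynomial.fit(x_train, y_train, degree)
    mse = float(np.mean((model(x_train) - y_train) ** 2))
    return model, mse

model_2, mse_2 = fit_and_score(2)
model_9, mse_9 = fit_and_score(9)

# Extrapolate to a point outside the range the models were fitted on
x_new = 5.0
true_value = 1.0 + 0.5 * x_new ** 2

print("training MSE  degree 2:", mse_2, " degree 9:", mse_9)
print("prediction at x = 5  degree 2:", model_2(x_new), " degree 9:", model_9(x_new))
print("noise-free value at x = 5:", true_value)
```

The higher-degree fit can never have a larger training error than the lower-degree one on the same data, which is exactly why training error alone is a poor guide. Comparing the two predictions at x = 5 against the noise-free value, or plotting both curves beyond the data range, is a quick way to see whether the extra flexibility is helping or hurting.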
